17 Apr Heterogeneous Caption/Audio Sync Correction – A Novel Approach in CapMate®

In today’s globalized media landscape, delivering content that transcends language barriers is paramount. Captions and subtitles are essential for making content accessible to diverse audiences. However, ensuring these captions are perfectly synchronized with the audio—especially when they are in different languages—presents unique challenges. This article delves into the complexities of heterogeneous caption/audio synchronization and introduces CapMate’s innovative approach to addressing this issue.

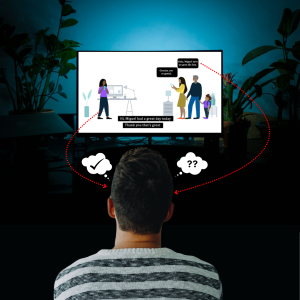

What is a Caption/Audio Sync Issue?

Caption/audio synchronization refers to the precise alignment of captions with the corresponding audio or spoken dialogue in video content. When captions are out of sync, whether they appear too early, appear too late, or do not match the spoken words, the viewing experience is disrupted, leading to confusion, reduced comprehension, and frustration for the audience. Sync issues can significantly undermine the accessibility and inclusivity that captions aim to provide.

What is a Heterogeneous Sync Issue?

In the realm of media localization and accessibility, a unique synchronization challenge arises when the audio track and its corresponding captions or subtitles are in different languages—a scenario known as a “heterogeneous sync issue.” Unlike the relatively straightforward task of aligning captions with audio in the same language (homogeneous sync), heterogeneous sync involves aligning translated text with the original audio.

This complexity stems from linguistic differences, such as varying sentence structures and lengths, as well as timing disparities between the source and target languages. For example, a video with English audio and Spanish subtitles demands precise synchronization to ensure the captions match the intent and flow of the original dialogue. Addressing these issues is critical for delivering an accurate and comprehensible viewing experience to a global audience.

Why is this Requirement Common?

With the proliferation of global content distribution, media is increasingly consumed by audiences who speak different languages. To cater to these diverse audiences, content creators often provide captions or subtitles in multiple languages. Ensuring these multilingual captions are accurately synchronized with the original audio is crucial for effective communication and viewer satisfaction. Without proper synchronization, even the best translations can fail to engage audiences or convey the intended meaning.

Challenges in Detection and Correction

Detecting and correcting heterogeneous sync issues presents a range of challenges, particularly when viewed through the lens of user roles and capabilities. These challenges can be grouped into three distinct scenarios:

Scenario 1: User Does Not Understand Either Language (Media or Caption):

Users who lack proficiency in both the audio and caption languages face a nearly insurmountable challenge in detecting and correcting sync offsets manually. Without contextual understanding, they are unable to identify or quantify misalignments, making traditional manual methods ineffective.

Scenario 2: User Understands One Language (Either Media or Caption):

Users who understand either the audio or the caption language may attempt to identify sync issues by leveraging translation tools or analysing contextual clues. However, this process is both time-intensive and error-prone. It requires substantial effort to detect offsets, let alone implement corrections, particularly for nuanced issues such as drift or segment-wise misalignment.

Scenario 3: User Understands Both Languages (Media and Caption):

Even for users fluent in both languages, the challenge remains significant. Detecting and quantifying sync offsets—such as progressive drift (where discrepancies grow over time due to errors like timecode mismatches) or segment-wise misalignment (arising from edited or stitched content)—is a complex and time-consuming task. Manual tools often lack the precision and efficiency required to address these issues, making corrections impractical at scale.

These scenarios underscore the need for advanced tools capable of automating detection and correction processes for drift and segment-wise sync offsets, ensuring accuracy and efficiency.
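To make progressive drift concrete, here is a small worked sketch. It assumes, purely for illustration, that the captions were timed against 25 fps timecode while the media plays at 24000/1001 (about 23.976) fps. The frame rates and the function name are assumptions chosen for the example, not details taken from CapMate.

```python
from fractions import Fraction

# Assumed frame rates for illustration only: captions authored against 25 fps
# timecode, media actually running at 24000/1001 fps (about 23.976).
CAPTION_FPS = Fraction(25)
MEDIA_FPS = Fraction(24000, 1001)


def drift_offset_seconds(caption_time_s):
    """Return how far a cue lands from its dialogue at a given caption time.

    A frame counted at 25 fps is reached later when the media plays at about
    23.976 fps, so the matching audio occurs at caption_time * (25 / 23.976).
    The difference is the drift, and it grows linearly with elapsed time.
    """
    ratio = CAPTION_FPS / MEDIA_FPS
    return float((ratio - 1) * Fraction(caption_time_s))


for minutes in (1, 10, 60):
    offset = drift_offset_seconds(minutes * 60)
    print(f"{minutes:>2} min -> cue is {offset:.2f} s ahead of its dialogue")
```

Under these assumed rates the gap reaches 153.75 seconds at the one-hour mark, which is why a single constant shift cannot repair a drifting file. A segment-wise offset behaves differently: it stays roughly constant within each segment and jumps at the edit points.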

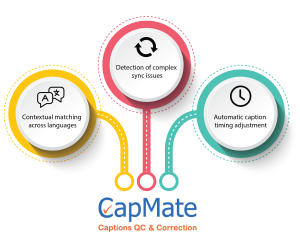

Our Approach in CapMate®

CapMate offers an innovative solution to address the intricate challenges of heterogeneous caption/audio synchronization. By leveraging advanced machine learning algorithms and large language models (LLMs), CapMate brings a new level of precision and automation to the process.

1. Detection and Correction of Complex Sync Issues:

The system is designed to handle both drift-type offsets (where misalignment increases progressively as the media progresses) and segment-wise offsets (where different segments have varying degrees of misalignment). Using sophisticated algorithms, CapMate quantifies the exact offsets and pinpoints areas requiring correction.

2. Automatic Caption Timing Adjustment:

At the end of the analysis, CapMate generates an auto-corrected caption file, ensuring that pervasive issues like drift and segment-wise sync offsets are fully resolved. This eliminates the need for extensive manual intervention, saving significant time and effort.
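The two steps above can be sketched in a few lines. This is a minimal illustration and not CapMate's algorithm: it assumes an earlier alignment step has already produced anchor pairs of (caption time, audio time) in seconds, that the caption file uses SRT-style `HH:MM:SS,mmm` timestamps, and that the drift is linear. The function names and sample values are hypothetical.

```python
import re

TS = re.compile(r"(\d{2}):(\d{2}):(\d{2}),(\d{3})")


def fit_drift(anchors):
    """Least-squares fit of audio_time = slope * caption_time + intercept."""
    n = len(anchors)
    mean_x = sum(c for c, _ in anchors) / n
    mean_y = sum(a for _, a in anchors) / n
    sxx = sum((c - mean_x) ** 2 for c, _ in anchors)
    sxy = sum((c - mean_x) * (a - mean_y) for c, a in anchors)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def split_segments(offsets, jump=0.5):
    """Group consecutive per-cue offsets into (first, last) index runs.

    A change larger than `jump` seconds between neighbours starts a new
    segment, which is one simple way to spot segment-wise misalignment.
    """
    if not offsets:
        return []
    segments = [[0, 0]]
    for i in range(1, len(offsets)):
        if abs(offsets[i] - offsets[i - 1]) > jump:
            segments.append([i, i])
        else:
            segments[-1][1] = i
    return [tuple(s) for s in segments]


def retime_srt(srt_text, slope, intercept):
    """Apply the fitted line to every HH:MM:SS,mmm timestamp in an SRT string."""

    def fix(match):
        h, m, s, ms = (int(g) for g in match.groups())
        old_ms = ((h * 60 + m) * 60 + s) * 1000 + ms
        new_ms = max(0, round(slope * old_ms + intercept * 1000))
        s_total, ms = divmod(new_ms, 1000)
        m_total, s = divmod(s_total, 60)
        h, m = divmod(m_total, 60)
        return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"

    return TS.sub(fix, srt_text)


# Hypothetical anchors lying on audio = 1.02 * caption + 0.5.
anchors = [(10.0, 10.7), (100.0, 102.5), (1000.0, 1020.5)]
slope, intercept = fit_drift(anchors)

sample = "1\n00:00:10,000 --> 00:00:12,000\nHola.\n"
print(retime_srt(sample, slope, intercept))
print(split_segments([0.1, 0.1, 0.2, 2.0, 2.1, -1.0]))
```

For segment-wise offsets, one option under the same assumptions is to run `split_segments` first and then fit and retime each segment separately. The hard part in the heterogeneous case is obtaining reliable anchors across two languages, which this sketch takes as given.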

Time Savings and Accuracy Indicators

CapMate’s automated capabilities result in substantial time savings for users and operators. Tasks that previously required days of manual effort, particularly in the case of heterogeneous sync issues, can now be completed in a fraction of the time. For instance, manually detecting and correcting complex sync issues in a 1-hour video might take several hours or even days in heterogeneous cases, because of the added complexity of translation and linguistic differences. With CapMate, the same task can be accomplished in minutes, regardless of the language pair.

1. Caption Quality Score:

CapMate provides a “Caption Quality Score,” a quantitative measure of the caption file’s quality based on the severity and number of defects present. This score allows users to gauge the overall quality of the captions quickly and make informed decisions.

2. Error Metrics:

The tool also provides detailed counts of errors in both the original caption file and the auto-corrected version. By highlighting the improvements made, CapMate ensures transparency and builds confidence in its automated correction process.
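The article does not publish how the Caption Quality Score is computed, so the following is only a sketch of how a severity-weighted score and a before/after error report could fit together. The severity labels, the weights, and both function names are hypothetical.

```python
from collections import Counter

# Hypothetical severity weights; CapMate's actual formula is not published.
SEVERITY_WEIGHT = {"minor": 1, "major": 3, "critical": 10}


def quality_score(defect_severities, cue_count):
    """Score from 0 to 100 that drops as weighted defects per cue rise."""
    if cue_count <= 0:
        raise ValueError("cue_count must be positive")
    penalty = sum(SEVERITY_WEIGHT[s] for s in defect_severities)
    # Normalise against one critical defect on every cue.
    worst = cue_count * SEVERITY_WEIGHT["critical"]
    return round(100 * max(0.0, 1 - penalty / worst), 1)


def error_report(before, after):
    """Map each severity to its (before, after) defect counts."""
    b, a = Counter(before), Counter(after)
    return {sev: (b[sev], a[sev]) for sev in SEVERITY_WEIGHT}


# Made-up defect lists for a 200-cue file, before and after correction.
before = ["critical"] * 4 + ["major"] * 10 + ["minor"] * 6
after = ["minor"] * 4

print(quality_score(before, 200), quality_score(after, 200))
print(error_report(before, after))
```

Reporting the counts alongside the single score keeps the result auditable: a reviewer can see which defect classes the automated correction removed and which remain.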

Conclusion

As the demand for multilingual content continues to rise, the need for accurate caption/audio synchronization across languages has never been more critical. Heterogeneous sync issues, once a daunting challenge for operators, are now addressed effectively by CapMate’s innovative approach. By leveraging cutting-edge AI/ML technologies, CapMate not only simplifies the detection and correction process but also delivers superior accuracy and time efficiency. For content creators and service providers striving to deliver high-quality, accessible media, CapMate represents a game-changing solution.