12 Jun How to detect & manage Audio Profanity in your content efficiently?

Audio profanity is the presence of foul, vulgar, or otherwise offensive words or phrases in the audio of content delivered to viewers. It can occur in any genre, including entertainment, news, and documentaries.

Profane language in your content can have several negative implications, from both a societal and a regulatory perspective.

Societal Impact

Profanity in your content can negatively influence viewers, especially younger audiences. Exposure to such language can help normalise offensive speech and may lead viewers to adopt inappropriate language or behaviour. For children and adolescents, who are at critical stages of social and linguistic development, regular exposure to profanity can undermine parental efforts to teach respectful communication and appropriate behaviour.

Moreover, profane language can offend viewers of any age because of their cultural, religious, or personal values. This can cause discomfort and alienation and reduce their overall enjoyment of the content.

For content creators and distributors, the inclusion of profanity may result in losing viewership from these offended audiences, impacting their reach and reputation.

Regulatory and Compliance Issues

Regulatory bodies, such as the Federal Communications Commission (FCC) in the United States, impose strict rules on profanity in broadcast media, and streaming platforms and distributors apply their own content standards. Failure to comply can result in significant fines, legal action, and damage to the credibility of the content creator or distributor.

Additionally, content rating systems, such as the Motion Picture Association (MPA) film rating system and the TV Parental Guidelines, take profane language into account. Content with frequent or strong profanity may receive a more restrictive rating, limiting its audience and distribution channels. This can affect the commercial viability of your content, because restrictive ratings can limit advertising opportunities and platform availability. Online platforms apply similar rules: in November 2022, YouTube updated its profanity guidelines to treat all curse words the same way. Under the updated guidelines, a video with profanity in its first seven seconds, or with profanity used “consistently” throughout, could be demonetized.
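To make the two triggers concrete, the sketch below checks a list of detected profanity instances against them. It is a minimal illustration under stated assumptions, not YouTube's own tooling: `Hit` and `youtube_style_summary` are hypothetical names, times are assumed to be in seconds from the start of the video, and because the guidelines quoted above give no numeric definition of “consistently”, the function only reports a per-minute rate and leaves that judgement to a reviewer.

```python
from dataclasses import dataclass


@dataclass
class Hit:
    """One detected profanity instance; times are seconds from the start of the video."""
    text: str
    start: float
    end: float


def youtube_style_summary(hits, duration_s, opening_window_s=7.0):
    """Summarise hits against the 'opening seconds' and 'consistent use' triggers.

    opening_window_s defaults to the seven seconds cited above and is a parameter
    so it can follow any later guideline change. No threshold is applied for
    'consistent' use (it is undefined here); only a rate is reported.
    """
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    # Any hit that begins inside the opening window trips the first trigger.
    opening = [h for h in hits if h.start < opening_window_s]
    return {
        "profanity_in_opening": bool(opening),
        "opening_hits": [h.text for h in opening],
        "hits_per_minute": round(len(hits) / (duration_s / 60.0), 2),
    }
```

For a 10-minute video with one hit at 3.2 s and another at 95 s, the summary flags the opening hit and reports a rate of 0.2 hits per minute.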

So, what should content providers do to avoid these negative implications?

- Extract a list of all profane words and phrases in the content, along with their locations, and submit it to the station before delivery.

- For delivery to territories where such words are not allowed, beep or mute every occurrence.

- Include an appropriate advisory with the content.
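Once the locations are known, the beep/mute step itself is simple. The following minimal sketch works directly on decoded mono PCM samples held in a Python list of floats between -1.0 and 1.0; that representation is an assumption, and real workflows usually apply these edits in an editing system or audio tool. `censor` is a hypothetical helper: each (start, end) interval in seconds is either silenced or replaced with a sine tone, defaulting to 1 kHz, a common choice for censor bleeps.

```python
import math


def censor(samples, sample_rate, intervals, mode="beep", beep_hz=1000.0, beep_gain=0.3):
    """Return a copy of `samples` with each (start_s, end_s) interval muted or beeped.

    samples:     mono PCM samples as floats in [-1.0, 1.0]
    sample_rate: samples per second, e.g. 48000
    intervals:   list of (start_s, end_s) tuples in seconds
    mode:        "mute" writes silence; "beep" writes a sine tone
    """
    if mode not in ("mute", "beep"):
        raise ValueError(f"unknown mode: {mode!r}")
    out = list(samples)  # leave the caller's buffer untouched
    for start_s, end_s in intervals:
        # Convert seconds to sample indices and clamp them to the buffer.
        first = max(0, int(round(start_s * sample_rate)))
        last = min(len(out), int(round(end_s * sample_rate)))
        for i in range(first, last):
            if mode == "mute":
                out[i] = 0.0
            else:
                # Phase is taken from the absolute sample index, so the tone
                # stays continuous if two intervals happen to touch.
                out[i] = beep_gain * math.sin(2 * math.pi * beep_hz * i / sample_rate)
    return out
```

Switching abruptly between programme audio and silence or a tone can produce audible clicks at the boundaries; a production tool would typically apply short fades, which this sketch omits.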

However, it’s easier said than done! Let’s see why!

Why is it tedious to detect audio profanity manually?

Since detecting and logging profane words is a regulatory requirement, achieving high accuracy is crucial. This typically requires an operator to watch the content meticulously in real time. When a profane segment is identified, the operator must replay it several times to log the specific words or phrases and their precise locations accurately. This process is labour-intensive and time-consuming even for a single delivery.

Consider a scenario where content previously tested for profanity needs to be delivered to a new country with additional profanity regulations not covered in the initial review. The operator must manually redo the entire profanity check to meet the new standards. This manual process is costly in terms of time and resources and becomes increasingly impractical and unsustainable as the content volume grows.

How can Quasar help?

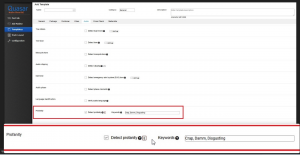

Quasar® can automate profanity detection, removing the need to spot profane words manually. Simply provide a customizable list of the words and phrases you consider profane, and Quasar will handle the rest.

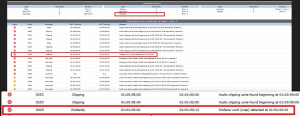

Quasar scans the content and reports every profane instance with its start and end times, along with the actual word or phrase detected at each location.
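This post does not describe how Quasar detects profanity internally. As an illustration of how a customizable word list can turn into start and end times, here is a minimal sketch that assumes the audio has already been transcribed by a speech-to-text step producing word-level timestamps. `find_profanity` and the `Word` tuple layout are hypothetical; multi-word entries are matched against consecutive words, and each reported span runs from the first matched word's start to the last one's end.

```python
from typing import List, Tuple

# (token, start_s, end_s) as produced by a hypothetical speech-to-text step
Word = Tuple[str, float, float]
Hit = Tuple[str, float, float]


def _normalise(token: str) -> str:
    """Lower-case a token and drop surrounding punctuation."""
    return token.lower().strip(".,!?;:\"'")


def find_profanity(words: List[Word], profane_terms: List[str]) -> List[Hit]:
    """Return (term, start_s, end_s) for every occurrence of a listed word or phrase."""
    tokens = [_normalise(w[0]) for w in words]
    # Split each list entry into words so phrases match consecutive tokens;
    # empty entries are ignored.
    patterns = [p for p in (term.lower().split() for term in profane_terms) if p]
    hits: List[Hit] = []
    for i in range(len(tokens)):
        for pattern in patterns:
            n = len(pattern)
            if tokens[i:i + n] == pattern:
                # Span runs from the first matched word's start to the last one's end.
                hits.append((" ".join(pattern), words[i][1], words[i + n - 1][2]))
    return hits
```

With this structure, checking content against a new territory's list (the scenario described earlier) only means re-running the match with the extra terms rather than re-watching the programme, although the result is only as accurate as the underlying transcript.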

There are two ways to review the profanity results:

- XML/PDF Reports: Quasar generates these reports for every QC job. Each report lists all violations, including their start and end time codes, which users can use to review the flagged content in the editor of their choice.

- QCtudio™: QCtudio™ offers an interactive system for reviewing results, with audio/video playback for every alert raised by Quasar. Users can jump directly to alert locations and review each instance manually. They can also add review comments to alerts and collaborate with team members to agree on action items. Alerts with user comments can be exported as a timeline file that can be imported into major editing solutions, so edits such as adding beeps or muting can be made directly in the editing system.
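Reports and timeline files express locations as time codes, while audio processing usually works in seconds. The conversion for non-drop-frame rates can be sketched as follows. Both functions are hypothetical helpers, the default of 25 fps is an assumption, drop-frame time code (as used at 29.97 fps) is not handled, and any programme start offset (for example, a programme that starts at 10:00:00:00) would have to be subtracted separately.

```python
def seconds_to_timecode(seconds: float, fps: int = 25) -> str:
    """Convert seconds to non-drop-frame time code HH:MM:SS:FF."""
    total_frames = int(round(seconds * fps))  # snap to the nearest whole frame
    total_seconds, frames = divmod(total_frames, fps)
    hours, rem = divmod(total_seconds, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"


def timecode_to_seconds(tc: str, fps: int = 25) -> float:
    """Convert non-drop-frame time code HH:MM:SS:FF back to seconds."""
    hours, minutes, secs, frames = (int(part) for part in tc.split(":"))
    if frames >= fps:
        raise ValueError(f"frame field {frames} out of range for {fps} fps")
    return hours * 3600 + minutes * 60 + secs + frames / fps
```

For example, `seconds_to_timecode(3725.52)` gives `01:02:05:13` at 25 fps, and `timecode_to_seconds` reverses it.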

Conclusion

Manual spotting and validation of audio profanity is prohibitively expensive in time and resources. A fully manual approach does not scale and is prone to human error, and having to re-validate the same content for every new delivery makes it even less viable. Automated detection and validation let you spot all violations quickly and efficiently, and reviewing the results in QCtudio further improves workflow efficiency by enabling faster decisions in a collaborative environment.

Automation is essential in today's fast-evolving technology landscape. With Quasar, you can achieve reliable automation.